Artificial intelligence misuse has become the most reported type of academic dishonesty violation at the University of Idaho, accounting for more than 50% of all violation reports, according to Director of Student Code of Conduct Andrea Ingram. The Communication department submits the most cases, followed by the English department.

Since the popular AI chatbot ChatGPT was released in November 2022, students have increasingly relied on it as a search engine replacement and as an aid in research, writing and even answering exam questions.

Following ChatGPT’s release, it took UI administrators approximately eight months to draft an AI policy. The policy states that there is nothing inherently wrong with students using AI as a tool unless it prevents them from engaging in the learning and creative process.

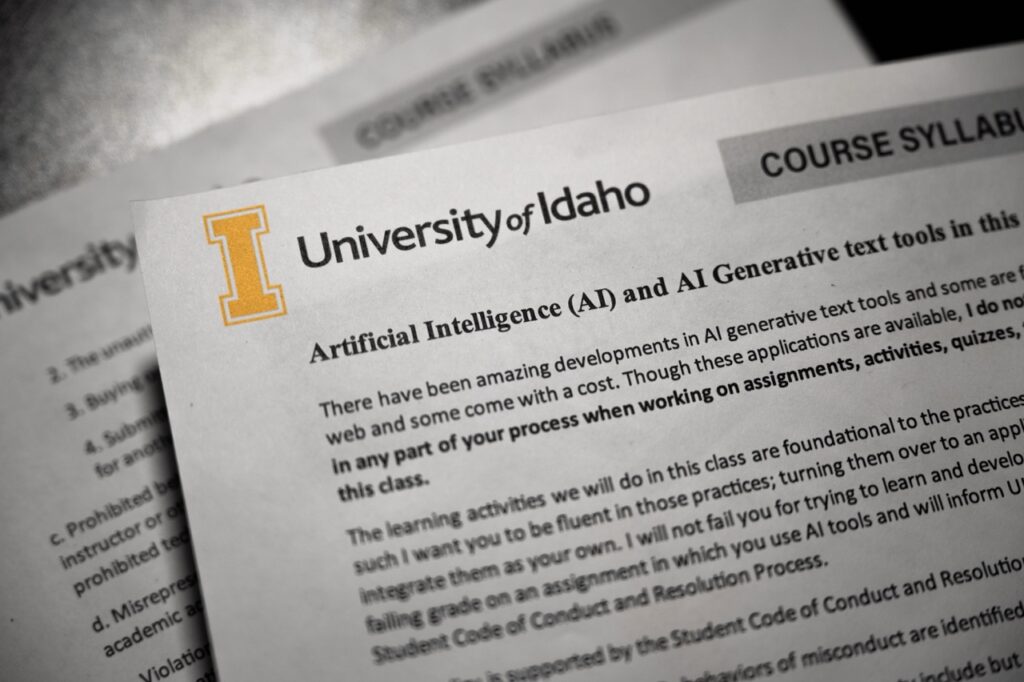

UI’s policy further allows professors to determine the extent to which AI use is allowed in their classes. If a student violates, or is suspected of violating, a professor’s policy, the professor will check the student’s work with an AI detection tool, then decide whether to handle the issue one-on-one with the student or file a conduct report with the university.

In a 2024 survey, the Digital Education Council reported that 86% of students in higher education have used generative AI and more than half use it daily or weekly. This survey also found 69% of students use it for research purposes, as opposed to using typical search engines like Google.

UI students were interviewed at random in the ISUB for their perspective on the matter. These students reported that they were more likely to use generative AI on multiple choice assignments and discussion boards. Increasing word count, making their writing more concise and saving time were also common reasons students gave for relying on AI.

In most interviews, students recognized the benefit of doing the homework themselves but did not necessarily see a problem with using AI to check grammar or content. One student said it was like having another person proofread their essay.

Emma Catherine Perry, the director of the UI Writing Center, sees a disproportionate share of academic integrity infractions levied against international or English as a Second Language (ESL) students. Moritz Cleve, a journalism and advertising professor at UI, explained that these students use programs such as Grammarly to smooth over their writing for fear of being penalized for grammar or sentence structure errors.

Perry views the writing process as the culmination of a hundred decisions, from the general themes and ideas down to individual word choices. Using AI to circumvent this process, including revision, undoes many of a student’s decisions and homogenizes the work into a product of conformity.

According to Perry, ESL students’ use of AI tools is the latest evolution of writing trends that have always had a disproportionate impact on them. For his part, Cleve said he wants to see student-generated ideas and self-expression, and that heavy use of AI is more damaging to student work than grammatical errors.

These AI tools “take some of the beauty out of their work,” said Perry.

Because UI’s AI policy allows professors to decide the extent to which AI use is permissible in their classes, Cleve has taught and encouraged its safe and ethical use through assignments that require critical thought about the nature of AI-generated content. He says students are responsible for using AI in ways that help them with menial tasks rather than inhibit learning outcomes.

Despite clear directions on when and where AI use is acceptable, Cleve still receives many AI-generated student submissions that violate his guidelines.

Ingram explained that each Code of Conduct investigation begins with a conversation between her and the student about the information the professor submitted. Canvas’ built-in AI detector is often used as evidence, but its results indicate likelihood, not fact. During the conduct hearing, students often admit to using AI and cite not understanding the assignment or a lack of time as the reason for their violation.

In some cases, violations occur because a student is unaware of a strict no-AI policy in a particular class. For this reason, Ingram encourages professors to explain their AI policies openly.

Students found responsible for an academic dishonesty violation may face a $150 administrative fee and some level of corrective action, such as a referral to the Writing Center.

Joshua Reisenfeld can be reached at [email protected].